Scribetech has partnered with the NHS for over two decades, delivering clinical documentation services through teams in Manchester and Bangalore. As advances in speech recognition, AI and natural language processing (NLP) accelerated, Scribetech saw the opportunity to create a voice-AI solution that could dramatically improve efficiency and accuracy while remaining cost-effective.

Beyond streamlining documentation, this solution also supported the broader goal of digitising clinical data, an essential step towards improving patient care and optimising healthcare operations. From the earliest stages, we collaborated closely with Scribetech’s founding team, acting as strategic partners in product development while also shaping the brand identity, user experience and multi-platform interfaces for their voice-based platform, Augnito.

EXPERTISE

- UX Research

- Systems Thinking

- Product Strategy

- UX/UI Design

- Branding

OUTCOMES

- 200 online radiology licences sold in the first two weeks after release.

- Doctors gained the flexibility to work on the move, since all data was synced to the cloud.

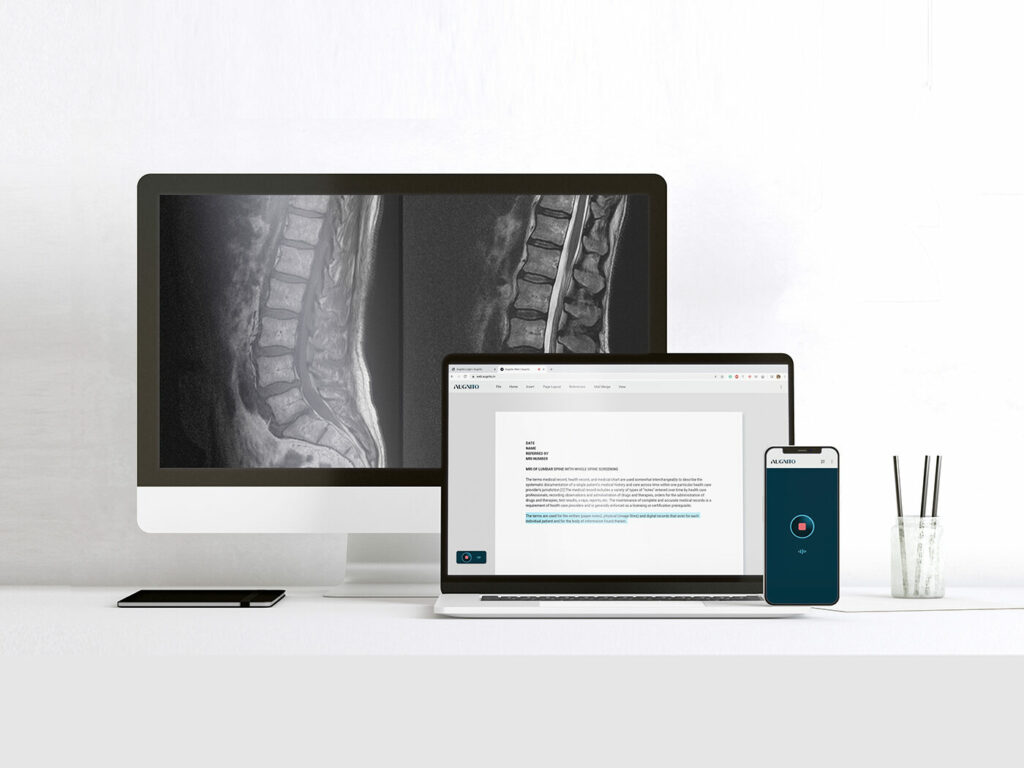

- Multimodal user experience blending voice and screen interfaces across devices

- Comprehensive brand identity and UI kits for the Augnito app and widgets

APPROACH

One of Scribetech’s advantages was a large existing database of medical voice recordings from doctors working in the UK who came from all over the world, and who therefore spoke with a wide range of accents. Because existing products were prohibitively expensive for most doctors, the plan was to use this data to develop an accessible, error-free medical dictation tool.

To make Augnito as accessible as possible, we developed an integrated solution that required no hardware beyond what doctors were likely to be using already. Starting from the brand identity, we designed UIs for every user story that could arise across devices, including chat and customer support.

Certain clinical settings posed unique challenges for voice recording. ENT examinations, for example, tend to involve moving from one screening tool to another, sometimes between rooms. If the recording device sat in a fixed position in the exam room, audio quality was likely to be inconsistent. The alternative, requiring doctors to wear a high-end portable headset, could prove expensive and cumbersome. Radiology emerged as an ideal use case, as radiologists usually sit at their desks and write reports while reviewing scans.

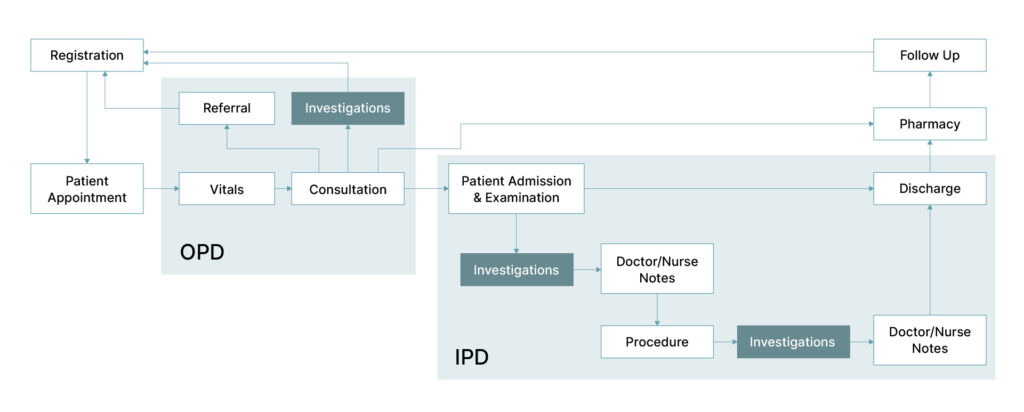

We then analysed documents such as discharge summaries, and studied the patient journey in typical Indian outpatient settings along with the kinds of interaction that take place between doctors and patients. In parallel, we researched how Electronic Medical Records (EMRs) are created and stored, and mapped the clinical transcription process to trace the journey from the consultation to the final investigation report or discharge summary, depending on the treatment prescribed.

Our aim was to let doctors give their patients as much of their time and attention as possible, while transcription happened effortlessly in the background.

The next step was to identify the points at which a voice-based system could help, and the supporting features physicians would find useful, so that we could offer an end-to-end solution for generating reports. For example, most doctors used pre-formatted templates, so the software needed a section for creating and editing them.

Automatic speech recognition (ASR) is the technology that converts speech into text. When designing the voice UI, we studied best practices as well as existing research on adjacent technologies, particularly interactive voice response (IVR), which has been in use for several decades.

To create a completely voice-controlled UI, app-related tasks and commands also had to be voice-based, so that users would not have to keep switching between dictating and using their computer to reach a menu. A doctor might want to start a new paragraph or delete a portion of text mid-dictation, so the system had to distinguish between speech intended for the report and spoken commands.

As a result, the rules we follow when designing a purely screen-based UI did not apply here. Augnito was multimodal: voice-controlled, but with a screen component, because doctors needed to see what they had dictated and to have on-screen controls available as well.

The mobile version was a standalone app that could be used for recording and editing text, with the smartphone’s built-in microphone serving as the audio source. In the backend, this meant the software had to transfer audio from the phone to the cloud server and send the transcription to the user’s laptop or desktop. Linking the devices worked in a similar way to how WhatsApp uses a QR code to connect multiple devices. Each of these interfaces, widgets and states had to be designed as part of a cohesive design system.

Fun fact: the letter ‘A’ in Augnito’s logo is derived from the shape of the uvula, the teardrop-shaped tissue that hangs at the back of the throat and plays a part in speech. In the logo, this form flows into a sound wave, and the resulting symbol became the app icon.

We developed comprehensive brand guidelines to ensure consistency across every touchpoint, from the product interface and mobile app to sales materials and the website. These guidelines covered everything from logo usage and typography to tone of voice and interaction styles, helping the Scribetech team maintain coherence as the product scaled.

Because the platform was cloud-based, doctors had more flexibility to work on the move and could switch effortlessly between their phones and computers. The pandemic hit not long after Augnito’s launch, and during lockdowns telemedicine was often the only option for non-critical medical treatment. Digital tools like Augnito, which enabled doctors to document patient interactions remotely and reduced their reliance on support staff, saw a dramatic increase in adoption. This project is a great example of how strategic design, thoughtful research and close collaboration can bring powerful tools to life, especially in high-impact sectors like healthcare.

“It’s rare to work with a team that immerses itself into a project, with such an eye to detail and rigour of the customer centric design process. Innovation is achieved through deep customer insights and empathy and no one does it better than BRND Studio!”

Rustom Lawyer

Co-Founder & CEO, Augnito